It has come to my attention that a few people have been unable to register for this site because they are using one browser extension or another to block tracking code, cookies, malicious javascript, etc. One such extension, which I use myself on some sites, is Ghostery. What is happening is that the code in the page that generates the "Captcha" is blocked, so the graphic isn't visible. Consequently, the registration attempt fails with the error message: "ERROR: Invalid reCAPTCHA challenge parameter." With all the attention the internet privacy meme is getting, I thought I'd address this problem head on and talk about site privacy.


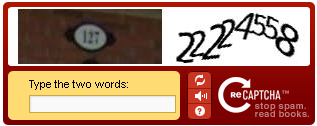

First I should explain that a Captcha mechanism is intended to prevent automated registrations by robot crawlers intent on either hacking the site for their own nefarious purposes or spamming it to no good end. It works by challenging people who register with information that only a human can read. This effectively thwarts the robots and is standard procedure on websites that want to restrict registrations to real people. I'm sure you've run across them before. A typical reCaptcha graphic from our site is shown at left. The solution is simple: disable your blocking extension temporarily, long enough to get registered; then you can turn it back on if you want and continue to browse in (relative) privacy. I've updated the User Policy page with information about this. Hopefully anyone having problems registering will figure it out or contact me about it.


While on the subject, I should point out that I don't object to people blocking tracking code or ad-oriented cookies and javascript if they want to; in fact, I'm a bit surprised that more people aren't doing it. On the Clary Lake Association website we employ several methods of tracking visitors to the site, including Google Analytics and some WordPress stat-gathering tokens, but the information we obtain from them is rather benign and is not used for user-tracking or advertising purposes. Visitor statistics provide some simple feedback about how many people are using the site, what posts and pages are being looked at, etc. They are like those hoses at the gas station that ring a bell inside when a car pulls up to the pump: they let the attendant on duty know that there is someone in the yard. The statistics do not allow us to identify individual people (unless they've logged in, of course) or follow them around the internet, though that kind of user tracking is done on a regular basis and is the main reason why I use Ghostery.

You might wonder how I know that more people aren't using such blocking extensions. Simple. Even people who use Ghostery or similar browser extensions leave "footprints" wherever they go. While the software they're using may block most traffic-monitoring schemes in use on most sites, EVERY request to a web server for pages from a site hosted on that server is logged in excruciating detail (including browser type, operating system, search engine terms, referrers, files requested, etc.) regardless of a user's attempts to hide their tracks. In other words, if you visit a website, there IS a record of it. These web server logs are most useful for basic traffic and load monitoring, but you can compare the raw traffic data with the massaged visitor statistics that the site generates, and when I do, it shows me that only a few people are taking steps to hide their browsing. You know who you are 🙂
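For the technically curious, here is a minimal Python sketch of what one of those log entries looks like and how the fields can be pulled apart. It assumes the server writes the common Apache/Nginx "combined" log format (servers can be configured differently), and the sample line, IP address, page path, and search referrer are all made up for illustration; they are not taken from our logs.

```python
import re

# Pattern for the Apache/Nginx "combined" log format (an assumption: a server
# may be configured to log a different set of fields).
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return the fields of one combined-format log line as a dict, or None if it doesn't match."""
    m = COMBINED.match(line)
    return m.groupdict() if m else None

# Hypothetical log entry: the IP, path, and referrer are illustrative only.
sample = ('203.0.113.7 - - [12/Jun/2013:10:15:32 -0400] '
          '"GET /lake-levels/ HTTP/1.1" 200 5120 '
          '"https://www.google.com/search?q=clary+lake" '
          '"Mozilla/5.0 (Windows NT 6.1; rv:21.0) Gecko/20100101 Firefox/21.0"')

entry = parse_line(sample)
for key, value in entry.items():
    print(f"{key:>8}: {value}")
```

Notice that the visitor's address, the page requested, the search that led there (via the referrer), and the browser and operating system (via the user agent) are all recorded by the server itself, so a browser-side blocker has no say in whether this line gets written.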

The other thing one finds out from looking at the web server logs is how much traffic is human and how much is "other" traffic, such as bots, spiders, and crawlers: typically over 90% of the traffic on a website comes from search engines out indexing the internet.
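As a rough illustration of how that split can be estimated, here is a small Python sketch that flags requests as automated when the User-Agent string identifies itself as a bot. The keyword list and the sample User-Agent strings are assumptions chosen for the example, not what our site uses. Real log analyzers rely on much larger, maintained lists, and badly behaved bots often pretend to be ordinary browsers, so a simple check like this tends to undercount them.

```python
import re

# Hypothetical keyword list; real tools use far larger, regularly updated lists.
BOT_PATTERN = re.compile(r"bot|crawl|spider|slurp", re.IGNORECASE)

def is_bot(user_agent):
    """Crude heuristic: treat a request as automated if its User-Agent names itself as a bot."""
    return bool(BOT_PATTERN.search(user_agent))

def bot_share(user_agents):
    """Fraction of requests whose User-Agent matches the bot pattern (0.0 for no requests)."""
    agents = list(user_agents)
    if not agents:
        return 0.0
    return sum(is_bot(a) for a in agents) / len(agents)

# Made-up User-Agent strings standing in for entries pulled from a server log.
agents = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (compatible; Yahoo! Slurp; http://help.yahoo.com/help/us/ysearch/slurp)",
    "Mozilla/5.0 (Windows NT 6.1; rv:21.0) Gecko/20100101 Firefox/21.0",
]
print(f"{bot_share(agents):.0%} of these requests look automated")
```

With these four sample entries, three of them identify themselves as search engine crawlers, so the script reports 75%.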

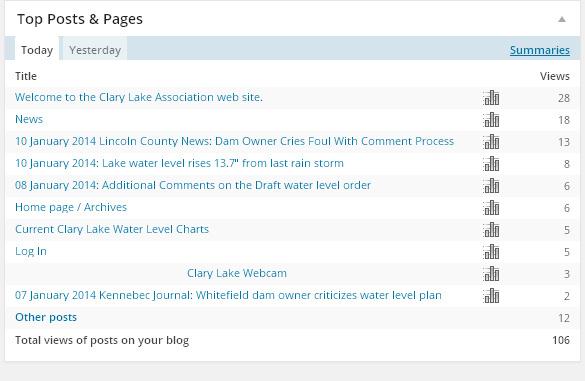

Here’s the kind of visitor statistics I look at from time to time. As you can see, they’re pretty innocuous: